Business Case:

Audience feedback is a key factor in evaluating the effectiveness of a conference, summit, or meeting. Capturing consistent, high-quality feedback from audience members, however, is a challenge. Traditional tools such as surveys do not accurately gauge how audience members actually feel: they suffer from low response rates, delayed responses, and participant bias.

Cameras and AI have long been used to recognize objects, but that analysis could not report anything about an object beyond its physical description. MAQ Software developed Emotion Analyzer to take object recognition a step further.

To demonstrate Emotion Analyzer, we worked with our development team to measure audience feedback from 300 attendees at a company-wide meeting.

Key Challenges:

- Identify individual faces from a crowd of attendees captured in video footage.

- Detect emotions on faces by analyzing facial expressions.

- Translate emotion data into a real-time graph to gauge overall attendee sentiment.

- Maintain privacy of individuals while collecting and analyzing video footage.

Solution:

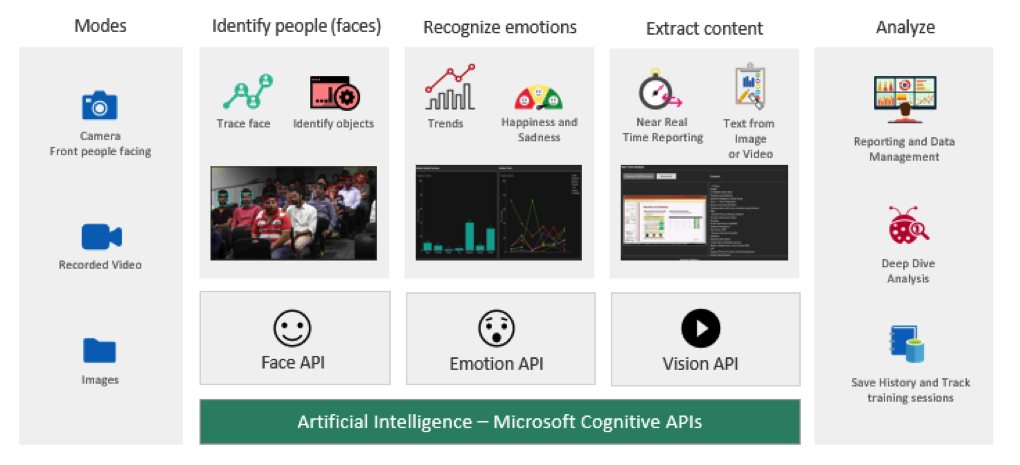

Our custom-built Emotion Analyzer can extract data from live video footage, recorded video, or static images. From the extracted data, the software recognizes faces and analyzes emotions conveyed through facial expressions. Emotion Analyzer then outputs the observed sentiment data graphically.

Key Highlights:

- Used Microsoft’s Cognitive Face API to detect faces and identify sentiment person by person.

- Used Microsoft’s Emotion API to match facial patterns to emotions.

- Extracted emotion data using Microsoft’s Vision API.

Figure 1: Microsoft Cognitive APIs

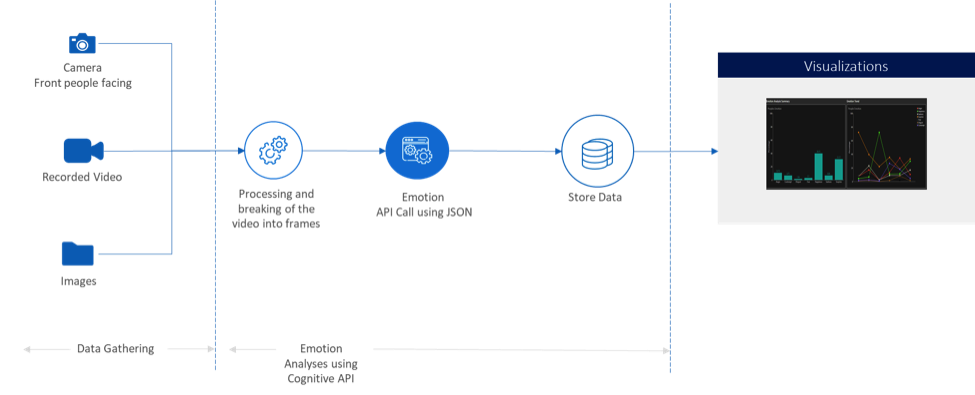

To analyze audience sentiment, we first broke the video input into individual frames and submitted each frame to the API. We captured the data the API returned and stored it in a SQL Server database for deeper analysis.

Figure 2: API Flowchart
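The frame-sampling and storage steps described above can be sketched in Python as follows. This is a minimal illustration under assumptions the case study does not state: frames are sampled at a fixed interval, each API result is a list of faces carrying a `scores` dictionary keyed by the eight emotion names, and SQLite (Python's `sqlite3`) stands in for SQL Server. `fake_analyze_frame` is a hypothetical placeholder for the real API call.

```python
import sqlite3

# Emotion categories reported in the distribution analysis.
EMOTIONS = ("anger", "contempt", "disgust", "fear",
            "happiness", "neutral", "sadness", "surprise")


def sample_frame_indices(total_frames: int, fps: float, every_seconds: float) -> list[int]:
    """Return indices of frames to submit, roughly one every `every_seconds` seconds."""
    step = max(1, round(fps * every_seconds))
    return list(range(0, total_frames, step))


def create_table(conn: sqlite3.Connection) -> None:
    """One row per detected face: frame timestamp plus one column per emotion score."""
    cols = ", ".join(f"{e} REAL" for e in EMOTIONS)
    conn.execute(f"CREATE TABLE IF NOT EXISTS face_emotions (timestamp_s REAL, {cols})")


def store_frame_result(conn: sqlite3.Connection, timestamp_s: float, faces: list[dict]) -> None:
    """Insert the emotion scores for every face found in one frame."""
    placeholders = ", ".join("?" * (len(EMOTIONS) + 1))
    rows = [
        (timestamp_s, *(float(face["scores"].get(e, 0.0)) for e in EMOTIONS))
        for face in faces
    ]
    conn.executemany(f"INSERT INTO face_emotions VALUES ({placeholders})", rows)
    conn.commit()


def fake_analyze_frame(frame_index: int) -> list[dict]:
    """Hypothetical stand-in for the API call; returns one canned face per frame."""
    return [{"scores": {"happiness": 0.8, "neutral": 0.2}}]


if __name__ == "__main__":
    fps, total_frames = 30.0, 300  # hypothetical 10-second clip
    conn = sqlite3.connect(":memory:")
    create_table(conn)
    for idx in sample_frame_indices(total_frames, fps, every_seconds=1.0):
        store_frame_result(conn, idx / fps, fake_analyze_frame(idx))
    print(conn.execute("SELECT COUNT(*) FROM face_emotions").fetchone()[0])  # 10
```

Sampling one frame per second rather than every frame is a design choice for the sketch: it keeps the number of API calls proportional to meeting length instead of frame rate.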

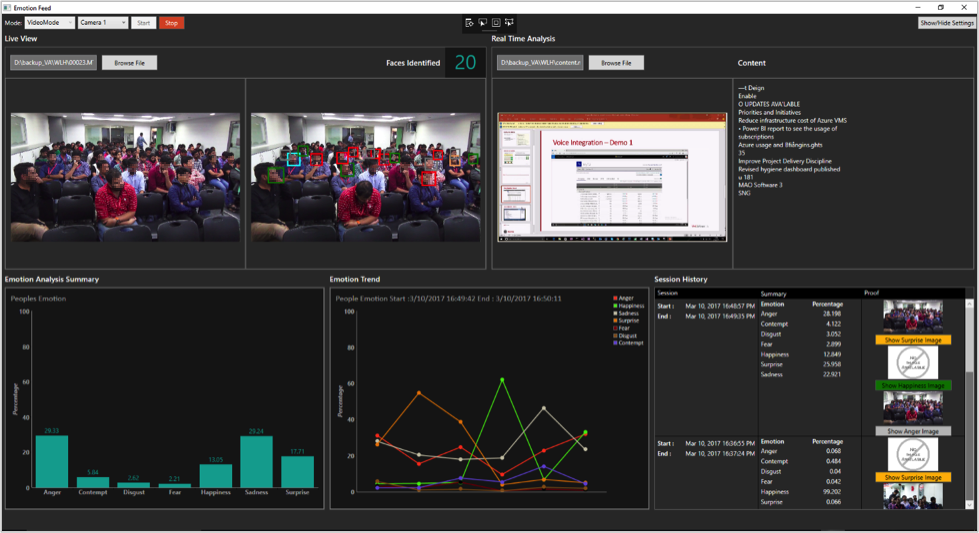

Next, the software analyzed the observed audience feedback and plotted the distribution of eight emotion categories: anger, contempt, disgust, fear, happiness, neutral, sadness, and surprise. The facial expressions behind these emotions are widely considered to be recognized across cultures, and they are difficult to hide or fake.

Figure 3: Distribution Analysis
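The case study does not say how per-face results were aggregated into the distribution. One plausible approach, sketched here as an assumption, is to assign each detected face its highest-scoring emotion and report the share of faces in each category. The input is assumed to be a list of per-face score dictionaries keyed by the eight emotion names; `dominant_emotion` and `emotion_distribution` are hypothetical helpers.

```python
from collections import Counter

EMOTIONS = ("anger", "contempt", "disgust", "fear",
            "happiness", "neutral", "sadness", "surprise")


def dominant_emotion(scores: dict[str, float]) -> str:
    """Return the highest-scoring emotion; ties resolve to the earlier name in EMOTIONS."""
    return max(EMOTIONS, key=lambda e: scores.get(e, 0.0))


def emotion_distribution(face_scores: list[dict[str, float]]) -> dict[str, float]:
    """Share of faces whose dominant emotion falls in each category.

    Shares sum to 1 when at least one face is present; all are 0.0 otherwise.
    """
    counts = Counter(dominant_emotion(s) for s in face_scores)
    total = len(face_scores)
    return {e: (counts[e] / total if total else 0.0) for e in EMOTIONS}


if __name__ == "__main__":
    # Hypothetical scores for three detected faces.
    faces = [
        {"happiness": 0.9, "neutral": 0.1},
        {"neutral": 0.8, "sadness": 0.2},
        {"happiness": 0.6, "surprise": 0.4},
    ]
    for emotion, share in emotion_distribution(faces).items():
        print(f"{emotion:>10}: {share:.0%}")
```

Averaging the raw scores per category would be an equally reasonable alternative; counting dominant emotions is simpler to read as "what share of the room looked happy".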

To protect the privacy of audience members, Emotion Analyzer does not match faces to individuals or store facial recognition data.
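One way to enforce this in code is to discard everything except the emotion scores before any record is stored. The sketch below assumes each per-face result contains fields such as `faceId` and `faceRectangle` alongside a `scores` dictionary; these field names and the `anonymize_face` helper are illustrative assumptions, not Emotion Analyzer's documented implementation.

```python
EMOTIONS = ("anger", "contempt", "disgust", "fear",
            "happiness", "neutral", "sadness", "surprise")


def anonymize_face(face_result: dict, timestamp_s: float) -> dict:
    """Reduce one per-face result to a timestamp and emotion scores only.

    Face IDs, bounding rectangles, and any other fields are dropped, so the
    stored record carries no face identifier or location in the frame.
    """
    scores = face_result.get("scores", {})
    record = {"timestamp_s": float(timestamp_s)}
    record.update({e: float(scores.get(e, 0.0)) for e in EMOTIONS})
    return record


if __name__ == "__main__":
    raw = {
        "faceId": "hypothetical-id",
        "faceRectangle": {"left": 10, "top": 20, "width": 64, "height": 64},
        "scores": {"happiness": 0.7, "neutral": 0.3},
    }
    print(anonymize_face(raw, timestamp_s=12.0))
```

Using an allow-list (keep only known emotion keys) rather than a deny-list means any new identifying field added to the input is dropped by default.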

Business Outcome:

Using Emotion Analyzer, we observed emotional trends, such as periods when the audience's happiness quotient increased. Cross-referencing these periods against the presentation let us pinpoint the specific topics that made participants happy or sad.
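The case study does not define the happiness quotient. A minimal sketch, assuming it is the mean `happiness` score across all faces within a fixed time window, shows how such trends could be surfaced and then lined up against the presentation agenda. The window length, the threshold, and both function names are hypothetical.

```python
from collections import defaultdict
from collections.abc import Iterable


def mean_happiness_by_window(records: Iterable[tuple[float, float]], window_s: float) -> dict:
    """Group (timestamp_s, happiness) pairs into fixed windows and average each window.

    Returns {window_start_s: mean_happiness}, ordered by window start.
    """
    sums = defaultdict(float)
    counts = defaultdict(int)
    for timestamp_s, happiness in records:
        start = int(timestamp_s // window_s) * window_s
        sums[start] += happiness
        counts[start] += 1
    return {start: sums[start] / counts[start] for start in sorted(sums)}


def high_happiness_windows(means: dict, threshold: float) -> list:
    """Window starts whose mean happiness meets or exceeds `threshold`."""
    return [start for start, mean in means.items() if mean >= threshold]


if __name__ == "__main__":
    # Hypothetical per-face happiness scores with timestamps in seconds.
    records = [(5, 0.2), (30, 0.4), (65, 0.9), (90, 0.7), (130, 0.1)]
    means = mean_happiness_by_window(records, window_s=60)
    print(means)
    print(high_happiness_windows(means, threshold=0.5))  # [60]
```

Each reported window start (here, the minute beginning at 60 seconds) can then be matched to the slide or speaker active at that time.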

Capturing and recording audience sentiment directly allows businesses to gather feedback that is free of the low response rates and participant bias that affect surveys. With Microsoft Cognitive APIs, businesses no longer need to rely on cumbersome surveys for accurate feedback.

Outcome Highlights:

- Gathered real-time feedback from facial expressions during large meetings.

- Used expression analysis to reduce the need for surveys, resulting in significant cost and time savings.

- Enabled rapid tailoring of presentations to suit audience interests and needs.